Dev Update 15-Aug-2017

Hello! After some more hard crunch time at my day job (weekends and all), I can finally return to indie dev again! Although I will be busy in September, the plan for now and the upcoming winter is to get lots of things done for the game! So, what’s new? Well, I decided to concentrate more on gameplay. And one of the most important keystones to me, when we’re looking at the top-down shooter genre, is enemy behavior.

Game “AI”

I actually don’t like to call game AI artificial intelligence, because in general game AI is just scripted behavior. It will not “learn”. It might adapt to the player’s playstyle over the course of a game, but to me, that’s still not AI. A learning AI is unpredictable and nobody wants that for a game, neither the developer nor the player (players just might not know it). Instead, we want to learn the patterns and be able to master predicting them. This enables us to get into the flow and dance the game’s choreography.

I am looking at a variety of inspirational sources for my AI. On one hand, lots of inspiration comes from Doom and its very chess-like approach, which is simple but brilliant: What can we learn from Doom | Game Maker’s Toolkit – Enemies have distinct movement and attack patterns and must be prioritized. Combining different enemy types in different environments can create entirely fresh experiences.

On the other hand, I am fascinated by more complex individual and group behavior. Enemies should have a bigger skillset than just charging and being bullet sponges. That is fine for zombies or similar, but there should be variation based on enemy type, so we can also learn their individual traits.

Behavior Trees

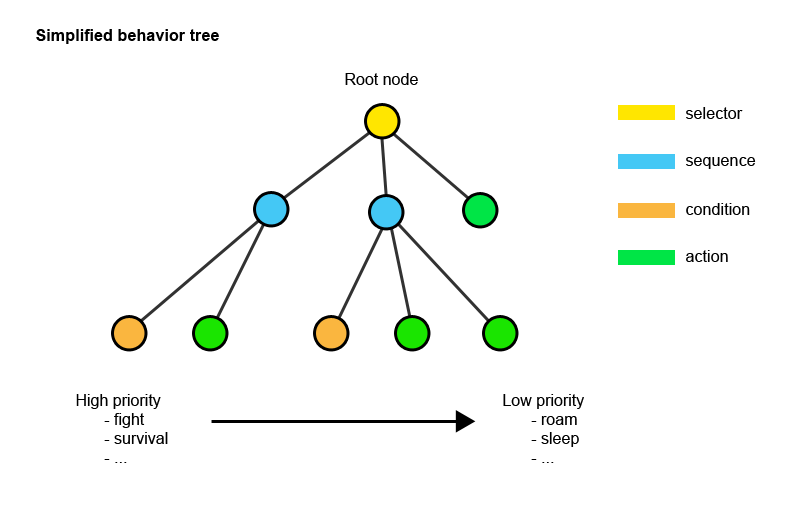

For Biosignature, I decided to implement AI by writing my own behavior tree system. Behavior tree driven AI is quite common in games and there are plenty of resources. Give this article a read if you want to learn more about it, but simply put, it is a state machine in a hierarchical tree layout, where branches are usually either sequences or selectors, and leaves are either conditions or actions. The AI works by continuously ticking along that tree and evaluating the valid branches for a given scenario. The scheme below is pretty rough but I think you can get the idea from it:

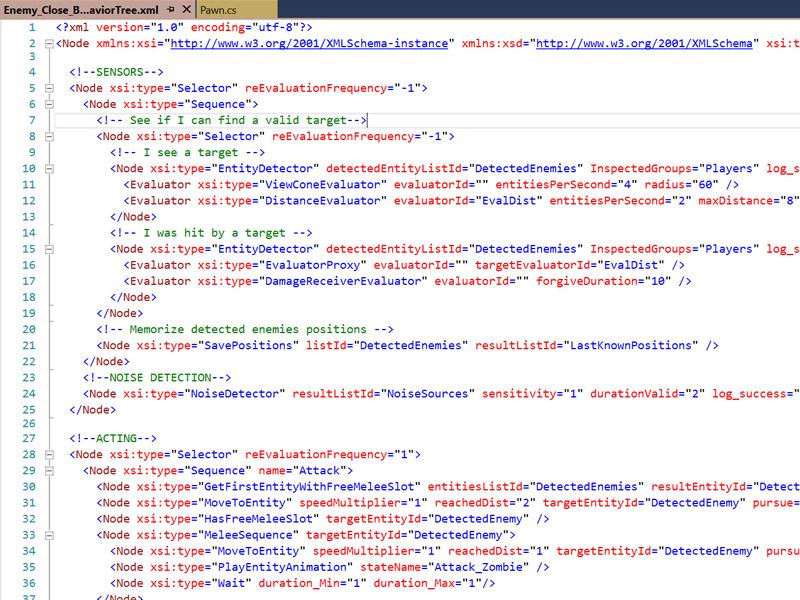
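To make the structure a bit more concrete, here is a minimal sketch in Python. It is not the actual Biosignature code (that lives in Unity); all class names, the `agent` dictionary, and the "attack"/"patrol" actions are assumptions for illustration only.

```python
from enum import Enum


class Status(Enum):
    SUCCESS = 1
    FAILURE = 2
    RUNNING = 3


class Condition:
    """Leaf that checks something about the agent and returns immediately."""

    def __init__(self, predicate):
        self.predicate = predicate

    def tick(self, agent):
        return Status.SUCCESS if self.predicate(agent) else Status.FAILURE


class Action:
    """Leaf that does something; the wrapped callable returns a Status."""

    def __init__(self, effect):
        self.effect = effect

    def tick(self, agent):
        return self.effect(agent)


class Sequence:
    """Ticks children in order and stops at the first one that does not succeed."""

    def __init__(self, children):
        self.children = children

    def tick(self, agent):
        for child in self.children:
            status = child.tick(agent)
            if status is not Status.SUCCESS:
                return status
        return Status.SUCCESS


class Selector:
    """Ticks children in order and stops at the first one that does not fail."""

    def __init__(self, children):
        self.children = children

    def tick(self, agent):
        for child in self.children:
            status = child.tick(agent)
            if status is not Status.FAILURE:
                return status
        return Status.FAILURE


def log(name):
    """Hypothetical action that only records its name on the agent."""
    def effect(agent):
        agent["log"].append(name)
        return Status.SUCCESS
    return effect


tree = Selector([
    Sequence([Condition(lambda a: a["target"] is not None), Action(log("attack"))]),
    Action(log("patrol")),
])

alien = {"target": None, "log": []}
tree.tick(alien)             # no target yet, so the selector falls through to "patrol"
alien["target"] = "player"
tree.tick(alien)             # the condition holds now, so the sequence runs "attack"
```

After the two ticks, `alien["log"]` contains "patrol" followed by "attack", which is the whole idea: the same tree picks a different valid branch as the situation changes.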

Actually there is also quite a good asset for this on the Unity Asset Store, Opsive’s Behavior Designer. I was using this at work and it is really great to get started, and I’d recommend it to anyone looking into AI. However, with the Behavior Designer I still wrote most of the actual nodes myself, so it was really just the visual debugging that I could benefit from. I don’t want to trade my full control over the code for this, so that’s why I prefer to implement my own system. I now use XML to write the actual trees and deserialize them into the game, which works quite well so far.
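As a rough illustration of what an XML-defined tree could look like, here is a small Python sketch using the standard library's `xml.etree.ElementTree`. The tag names, the `name` and `key` attributes, and the tuple layout are all made up for this example and are not the actual Biosignature schema.

```python
import xml.etree.ElementTree as ET

# Hypothetical tree definition; the schema is an assumption for illustration.
TREE_XML = """
<selector>
  <sequence>
    <condition name="has_target" key="target"/>
    <action name="moveto" key="target"/>
    <action name="attack" key="target"/>
  </sequence>
  <action name="patrol"/>
</selector>
"""


def build(element):
    """Turn an XML element into a nested (tag, attributes, children) tuple."""
    return (element.tag, dict(element.attrib), [build(child) for child in element])


def leaf_names(node):
    """Collect the names of all leaves, in the order they would be ticked."""
    tag, attrs, children = node
    if not children:
        return [attrs.get("name", tag)]
    names = []
    for child in children:
        names.extend(leaf_names(child))
    return names


root = build(ET.fromstring(TREE_XML.strip()))
print(leaf_names(root))
```

In a real system, `build` would map each tag to a node class instead of a tuple, but the recursion stays the same.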

I want to give you a brief overview of how I approach the implementation based on a simple example: Imagine a dangerous alien roaming around the area. You and your friends need to get past it. The alien doesn’t know you’re there yet…

Sense -> Think -> Act

These are the three pillars of AI. First, the alien needs to be able to sense its surroundings in some way, by seeing, hearing, and feeling. Then it can process that information to make decisions, and select and execute actions from the available set.

Sensors

So, knowing what’s happening around it is crucial for the alien. Now, there are different ways it will be able to spot you. For Biosignature, I implemented a whole set of different types of sensors (I call them detectors) that can be combined and tweaked to fit my requirements:

- On sight. You are within a view cone, you are not obscured by any cover, you are within a certain distance (also measured on the navmesh), and you are lit (tbd).

- On touch. You are colliding with the AI agent.

- On receiving damage. Imagine shooting and hitting the AI agent from behind.

- On perceiving noise. Imagine shooting and missing, or bumping into something, or just making too much noise by running. The AI agent should start to investigate the position where the noise came from.

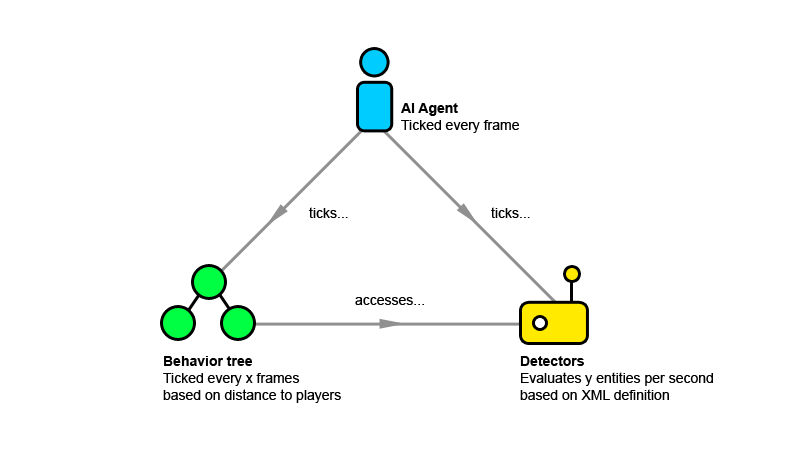
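The sight detector from the list above can be sketched roughly like this in Python. This is a simplified 2D version under my own assumptions: it uses straight-line distance instead of navmesh distance, and it skips the cover and lighting checks entirely. The function name and the default values are hypothetical.

```python
import math


def in_view_cone(agent_pos, facing_deg, target_pos, fov_deg=90.0, max_dist=10.0):
    """Return True if the target is inside the agent's view cone (2D, no cover check)."""
    dx = target_pos[0] - agent_pos[0]
    dy = target_pos[1] - agent_pos[1]
    dist = math.hypot(dx, dy)
    if dist > max_dist:
        return False              # too far away to be seen
    if dist == 0.0:
        return True               # standing on the same spot
    angle_to_target = math.degrees(math.atan2(dy, dx))
    # Smallest signed angle between facing direction and target, in [-180, 180).
    diff = (angle_to_target - facing_deg + 180.0) % 360.0 - 180.0
    return abs(diff) <= fov_deg / 2.0


print(in_view_cone((0, 0), 0.0, (5, 0)))    # straight ahead and close
print(in_view_cone((0, 0), 0.0, (-5, 0)))   # directly behind the agent
```

The cheap distance test comes first so the trigonometry only runs for targets that are in range at all.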

In implementation, we need to keep an eye on performance! Detectors like touch, damage or noise are event based and therefore cheap, as they only evaluate once triggered. But both the view cone and the distance checks are problematic: they need to run continuously, and such checks are pretty expensive when done on every tick. It gets worse when we need to make the check for multiple entities, like in a multiplayer scenario, and worse again when there are many AI agents running those checks.

Optimization: To counter this problem, I introduced asynchronous checks that run in the background and keep evaluating at a much lower cost, while still allowing access to valid results at all times. Just like the tree itself, the checks can also be LOD’d, meaning that when an AI agent is farther away, like on the other side of the map, it will check much less often to keep the footprint low.
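Here is a rough Python sketch of the idea. Note that it swaps in a simpler technique: instead of truly asynchronous background checks, it throttles an expensive check to run only every few ticks and caches the last result, with the interval growing with distance. The class name, the distance bands, and the multipliers are assumptions I made up for the example.

```python
class ThrottledCheck:
    """Caches the last result of an expensive check and re-runs it only every N ticks."""

    def __init__(self, check, base_interval=6):
        self.check = check                  # the expensive callable
        self.base_interval = base_interval  # ticks between runs at the highest LOD
        self.result = None                  # last valid result, readable at any time
        self.runs = 0                       # how often the expensive check actually ran
        self._next_tick = 0

    def interval_for(self, distance):
        """Hypothetical LOD bands: the farther away, the less often we check."""
        if distance < 20.0:
            return self.base_interval
        if distance < 60.0:
            return self.base_interval * 4
        return self.base_interval * 16

    def update(self, tick, distance):
        """Call every tick; only re-evaluates when the interval has elapsed."""
        if tick >= self._next_tick:
            self.result = self.check()
            self.runs += 1
            self._next_tick = tick + self.interval_for(distance)
        return self.result


def expensive_sight_check():
    return True  # stand-in for view cone, cover and navmesh distance tests


near = ThrottledCheck(expensive_sight_check)
far = ThrottledCheck(expensive_sight_check)
for tick in range(60):
    near.update(tick, distance=5.0)
    far.update(tick, distance=100.0)
print(near.runs, far.runs)
```

Over 60 ticks the nearby agent runs the expensive check 10 times and the faraway one only once, while both can hand out a cached result on every single tick.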

Decision

So in our example, you could either try to sneak past the alien or fight it. Let us now assume you failed to sneak past it, so it is now attacking you and your friends!

Since you are in a group, the alien is able to spot more than one target at the same time. That means that each of these detectors does not only have to “detect” as such, but also calculate a value for each valid target. For example, the distance check value is better for closer targets, but can be combined with a value for damage inflicted. I now sum up all evaluation values for each target and pick the target with the highest total. That means the AI is able to decide to pick the annoying sniper instead of the rushing tank.
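A minimal Python sketch of this scoring, with made-up numbers: the two evaluator functions, their weights, and the target dictionaries are assumptions for illustration, not the actual detector values from the game.

```python
import math


def distance_score(agent_pos, target, max_dist=30.0):
    """Closer targets score higher; anything beyond max_dist scores 0."""
    d = math.dist(agent_pos, target["pos"])
    return max(0.0, 1.0 - d / max_dist)


def damage_score(agent_pos, target, weight=0.02):
    """Targets that have hurt the agent more score higher."""
    return target["damage_dealt"] * weight


def pick_target(agent_pos, targets, evaluators):
    """Sum all evaluator values per target and return the best one (or None)."""
    best, best_score = None, float("-inf")
    for target in targets:
        score = sum(evaluate(agent_pos, target) for evaluate in evaluators)
        if score > best_score:
            best, best_score = target, score
    return best


targets = [
    {"name": "tank", "pos": (3.0, 0.0), "damage_dealt": 5.0},
    {"name": "sniper", "pos": (24.0, 0.0), "damage_dealt": 60.0},
]
best = pick_target((0.0, 0.0), targets, [distance_score, damage_score])
print(best["name"])
```

With these numbers the tank is much closer, but the sniper's damage pushes its total higher, so the alien goes for the sniper.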

However, to make decisions, a blank behavior tree is not enough! Let me explain. Nodes in behavior trees are generally implemented so that they clean up after themselves, so that no matter in what order they are ticked, they cannot lead to any unwanted behavior. Imagine a move node is interrupted midway because the agent was hit and a death animation starts playing, but the move node does not stop moving – uh oh. So we need to wipe all data and stop all actions on a node when we are done with it.
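As a sketch of what "cleaning up" can mean for a move node, here is a simplified one-dimensional example in Python. The `MoveTo` class, its `reset` method, and the agent dictionary are hypothetical and only illustrate the principle.

```python
class MoveTo:
    """Moves the agent along one axis; wipes its state whenever it finishes or is aborted."""

    def __init__(self, destination, speed=1.0):
        self.destination = destination
        self.speed = speed
        self.active = False

    def tick(self, agent):
        self.active = True
        remaining = self.destination - agent["x"]
        if abs(remaining) <= self.speed:
            agent["x"] = self.destination
            self.reset(agent)          # done: clean up before reporting success
            return "success"
        agent["velocity"] = self.speed if remaining > 0 else -self.speed
        agent["x"] += agent["velocity"]
        return "running"

    def reset(self, agent):
        """Wipe node state and stop the movement this node started."""
        self.active = False
        agent["velocity"] = 0.0


alien = {"x": 0.0, "velocity": 0.0}
move = MoveTo(destination=10.0)
for _ in range(3):
    move.tick(alien)                   # alien is underway, velocity is 1.0

# The alien gets hit: the tree aborts the branch and resets the node.
move.reset(alien)
print(alien)
```

Without the `reset` call the agent would keep its velocity and slide on through its own death animation.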

But you still need a place to store and share data between those nodes: the agent’s memory. This is usually called a blackboard. Every agent has its own blackboard, and every node of the agent’s behavior tree must be able to access it. For my blackboard implementation, I simply use a Dictionary<string, object> (varname, data). This way I am able to store data in the most generic way possible. I can name, write and fetch any data from the XML by just defining variable names and using “the right” nodes, meaning the nodes just need to agree on the same data type.

So I can now save the best evaluated target into the blackboard, and on another branch in the tree, I check if that target is not null, and if so, it simply executes “moveto” and “attack” nodes on it.
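In Python terms, the blackboard and the "target is not null" branch could be sketched like this. The `Blackboard` class, its type check, and the two node functions are my own assumptions for the example; the real implementation is the C# dictionary described above.

```python
class Blackboard:
    """Per-agent key/value memory shared by all nodes of that agent's tree."""

    def __init__(self):
        self._data = {}

    def set(self, key, value):
        self._data[key] = value

    def get(self, key, expected_type, default=None):
        """Fetch a value and make sure reader and writer agree on its type."""
        value = self._data.get(key, default)
        if value is not None and not isinstance(value, expected_type):
            raise TypeError(f"{key!r} holds {type(value).__name__}, "
                            f"expected {expected_type.__name__}")
        return value

    def clear(self, key):
        self._data.pop(key, None)


def store_best_target(blackboard, target_name):
    """Hypothetical writer node: saves the best evaluated target."""
    blackboard.set("target", target_name)


def attack_branch(blackboard):
    """Hypothetical reader branch: only acts if a target is stored."""
    target = blackboard.get("target", str)
    if target is None:
        return []
    return [("moveto", target), ("attack", target)]


memory = Blackboard()
idle = attack_branch(memory)           # nothing stored yet, so nothing to do
store_best_target(memory, "sniper")
engaged = attack_branch(memory)        # now "moveto" and "attack" run on the sniper
print(idle, engaged)
```

The two nodes never talk to each other directly; they only share the variable name "target" and the data type stored under it.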

Acting

The alien will now focus its prey with its dead eyes, and with a loud hiss it will sprint towards it to rip it apart. “Acting” is everything from movement to animation to playing audioclips on the AI agents.

Since Biosignature is a networked multiplayer game, all the evaluation and decision making will only run on the server machine. All the acting, however, needs to be distributed to all the client machines. The challenge now is to write the system in a way that I can trigger as much acting as possible with as little game-specific code as possible added to the behavior tree code. While there is probably no way to avoid some more game-related nodes, there are many opportunities to keep everything generic on this end. Imagine the alien is wounded and retreats in order to find healing (maybe by returning to the hive). So I’d just evaluate the best/closest “hive” entity position and execute “moveto”, maybe along with another audioclip, instead of adding a specific “findHive” node.
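To illustrate the generic approach, here is a small Python sketch where the server decides and only hands generic commands to the clients. There is no real networking in it, and everything (the `ActCommand` type, the `Client` class, the "hiss" clip name, the entity dictionaries) is hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ActCommand:
    """Generic instruction the server sends out; clients only play it back."""
    agent_id: int
    verb: str        # e.g. "moveto" or "play_audio"
    payload: tuple


def closest_entity(agent_pos, entities, tag):
    """Generic query: nearest entity with a given tag, or None."""
    candidates = [e for e in entities if e["tag"] == tag]
    if not candidates:
        return None
    return min(candidates, key=lambda e: math.dist(agent_pos, e["pos"]))


def retreat_commands(agent_id, agent_pos, entities, tag="hive"):
    """Server side: reuse the generic query plus "moveto" instead of a findHive node."""
    target = closest_entity(agent_pos, entities, tag)
    if target is None:
        return []
    return [
        ActCommand(agent_id, "moveto", target["pos"]),
        ActCommand(agent_id, "play_audio", ("hiss",)),
    ]


class Client:
    """Stand-in for a client machine; it just records what it was told to play."""

    def __init__(self):
        self.received = []

    def apply(self, command):
        self.received.append(command)


def broadcast(commands, clients):
    for client in clients:
        for command in commands:
            client.apply(command)


entities = [
    {"tag": "hive", "pos": (50.0, 0.0)},
    {"tag": "hive", "pos": (8.0, 6.0)},
    {"tag": "crate", "pos": (1.0, 1.0)},
]
clients = [Client(), Client()]
commands = retreat_commands(agent_id=7, agent_pos=(0.0, 0.0), entities=entities)
broadcast(commands, clients)
print(commands[0])
```

Changing the tag from "hive" to anything else gives a different retreat behavior without touching the tree code, which is the point of keeping the nodes generic.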

And that’s it, the AI framework is pretty much completed now! Here you can see a proof of concept of a melee enemy that was done during development (graphics to be replaced!):

I will continue working on AI and enemies, and explore the possibility space of movement patterns and behaviors to create interesting gameplay. There will be a big break for me in September, so expect the next update somewhere in late October 🙂

See you next time!

Philipp 🙂
